One of the best ways to verify that CloudyCluster is ready to run your important research investigations is to run one of the Sample Jobs built into the standard image. The included samples use small data sets and modest task requirements, so they quickly confirm that the environment is ready to handle larger, more demanding workloads.

The sample this article walks through uses the Message Passing Interface (MPI) to run a very fast job that counts the prime numbers between 0 and 25,000,000. The configuration reviewed below can be modified to change the number of compute nodes, the number of tasks each node runs, and the target number range. This job, referred to as MPIPrime, can be scheduled through either Torque or Slurm. Running it confirms that the Scheduler is ready to receive your job submissions and gives you an opportunity to interact with CCQ, the meta-scheduler that stores submitted jobs in a database and passes them to the scheduler, which in turn dispatches them to the compute nodes.
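The article does not show the source of the mpi_prime program, so the following is only an illustrative sketch of one common way a parallel prime counter can divide its range. Each task tests a strided share of the odd numbers, and the partial counts are then summed; in a real MPI program that sum is typically done with a reduction such as MPI_Reduce. The function names are hypothetical, and the sketch runs serially in shell on a small range so it finishes instantly.

```shell
#!/bin/sh
# Illustrative sketch only (NOT the actual mpi_prime source): serially
# simulates how NTASKS workers could share a prime-counting job.

# Succeeds (exit status 0) if $1 is prime, using trial division.
is_prime() {
  p=$1
  if [ "$p" -lt 2 ]; then return 1; fi
  if [ "$p" -lt 4 ]; then return 0; fi
  if [ $((p % 2)) -eq 0 ]; then return 1; fi
  d=3
  while [ $((d * d)) -le "$p" ]; do
    if [ $((p % d)) -eq 0 ]; then return 1; fi
    d=$((d + 2))
  done
  return 0
}

# Prints the number of primes below LIMIT ($1), split across NTASKS ($2)
# simulated ranks. Rank r tests the odd numbers 2r+1, 2r+1+2*NTASKS, ...
count_primes() {
  limit=$1 ntasks=$2 total=0 rank=0
  if [ "$limit" -gt 2 ]; then total=1; fi   # 2 is the only even prime
  while [ "$rank" -lt "$ntasks" ]; do
    partial=0
    n=$((2 * rank + 1))
    while [ "$n" -lt "$limit" ]; do
      if is_prime "$n"; then partial=$((partial + 1)); fi
      n=$((n + 2 * ntasks))
    done
    total=$((total + partial))   # stands in for an MPI reduction
    rank=$((rank + 1))
  done
  echo "$total"
}

count_primes 1000 4   # prints 168
```

Because every rank starts at a different odd offset and advances by the same stride, each odd number is tested exactly once, and the work stays roughly balanced as the number of tasks grows.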

Preparing the Sample Run

Once your CloudyCluster environment is ready to run, the first step is to copy the sample jobs to the shared OrangeFS file system:

```
$ cp -R /software/samplejobs /mnt/orangefs/
```
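Before you change into the sample directory, you can confirm that the copy landed where the job script expects it. The following is a minimal sketch that assumes the default shared file system name /mnt/orangefs, which is the SHARED_FS_NAME value in the job script. The helper name check_samples is hypothetical and is not part of CloudyCluster.

```shell
#!/bin/sh
# Hypothetical helper: confirms the samples were copied to the shared file
# system. Uses $1 if given, else $SHARED_FS_NAME, else /mnt/orangefs.
check_samples() {
  fs=${1:-${SHARED_FS_NAME:-/mnt/orangefs}}
  if [ -d "$fs/samplejobs/mpi" ]; then
    echo "found $fs/samplejobs/mpi"
  else
    echo "missing $fs/samplejobs/mpi" >&2
    return 1
  fi
}
```

For example, run check_samples on its own for the default location, or check_samples /path/to/your/fs if you named the shared file system differently.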

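Change into the MPI sample directory on the shared file system and compile the sample program with mpi_prime_compile.sh. The job script in this same directory is where you specify the Scheduler, the number and type of Compute Group nodes to use and, if desired, which MPI implementation to test:

```
$ cd /mnt/orangefs/samplejobs/mpi/GCP/
$ sh mpi_prime_compile.sh
```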

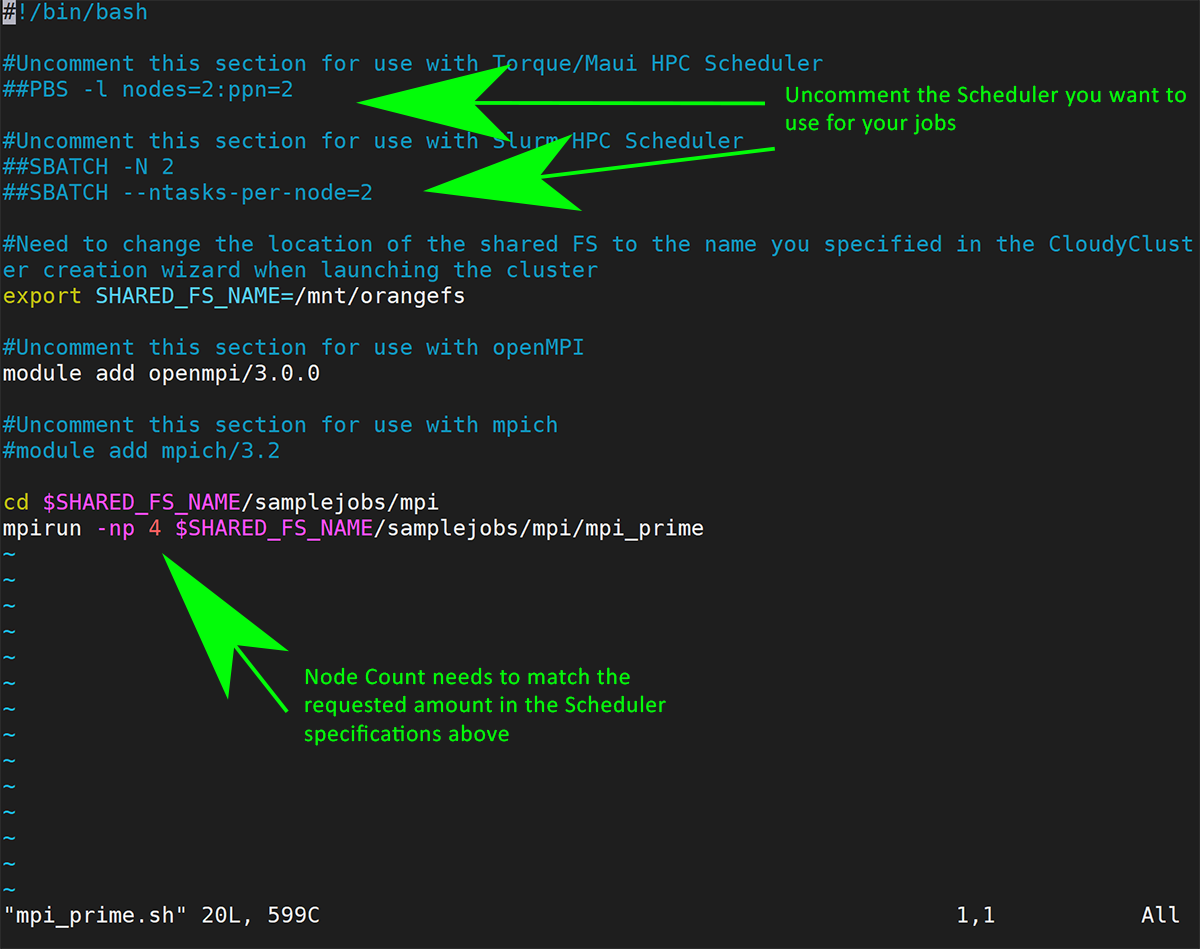

Next, the job script in this directory needs the directives for the Scheduler you designated during environment setup (Torque or Slurm) to be uncommented. Using the text editor of your choice (Vi, Vim, Nano), remove the extra # at the start of each of that scheduler's lines and save the file, as in the sample below:

```
$ vim mpi_prime.sh

#!/bin/bash
#Uncomment this section for use with Torque/Maui HPC Scheduler
##PBS -l nodes=2:ppn=2

#Uncomment this section for use with Slurm HPC Scheduler
##SBATCH -N 2
##SBATCH --ntasks-per-node=2
```

For this example, we will be using Slurm, so the script will look like this:

```
#Uncomment this section for use with Slurm HPC Scheduler
#SBATCH -N 2
#SBATCH --ntasks-per-node=2
```

- Note the single #, which is what allows the scheduler to recognize the line as a directive. A line starting with ## is treated as an ordinary comment.
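If you prefer not to edit the file by hand, you can script the same change. The following is a minimal sketch that assumes GNU sed, whose -i option edits the file in place, and a job script laid out like the sample above. The helper name enable_directives is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: turns commented-out "##<PREFIX>" lines into active
# "#<PREFIX>" directives. Requires GNU sed for in-place editing (-i).
#   $1 = job script
#   $2 = directive prefix: SBATCH (Slurm) or PBS (Torque)
enable_directives() {
  sed -i "s/^##$2/#$2/" "$1"
}
```

For example, run enable_directives mpi_prime.sh SBATCH for Slurm, or enable_directives mpi_prime.sh PBS for Torque, and then check the result with grep '^#SBATCH' mpi_prime.sh.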

If you gave the OrangeFS shared file system a different name in the CloudyCluster creation wizard, update this section to match the name you chose:

```
#Need to change the location of the shared FS to the name you specified in the CloudyCluster creation wizard when launching the cluster
export SHARED_FS_NAME=/mnt/orangefs
```
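This edit can also be scripted. The following is a minimal sketch that assumes GNU sed and a single export SHARED_FS_NAME= line in the job script. The helper set_shared_fs and the example path /mnt/myfs are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: rewrites the SHARED_FS_NAME export in a job script.
#   $1 = job script, $2 = new shared file system path (e.g. /mnt/myfs)
# "|" is used as the sed delimiter because paths contain "/"; a path that
# itself contains "|" or "&" would need escaping.
set_shared_fs() {
  sed -i "s|^export SHARED_FS_NAME=.*|export SHARED_FS_NAME=$2|" "$1"
}
```

For example, set_shared_fs mpi_prime.sh /mnt/myfs updates the export line to point at a file system named myfs.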

By default, the sample job uses OpenMPI. To run and test MPICH instead, modify this next section to disable OpenMPI and enable MPICH:

```
#Uncomment this section for use with openMPI
module add openmpi/3.0.0

#Uncomment this section for use with mpich
#module add mpich/3.2
```
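Switching between the two MPI implementations means commenting out one module line and uncommenting the other. The following is a minimal sketch of that toggle. It assumes GNU sed and the module line formats shown above. The helper names use_mpich and use_openmpi are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers: toggle which MPI module the job script loads.
# Requires GNU sed for in-place editing (-i). $1 = job script.

# Comment out the OpenMPI module line and enable the MPICH one.
use_mpich() {
  sed -i -e 's|^module add openmpi/|#module add openmpi/|' \
         -e 's|^#module add mpich/|module add mpich/|' "$1"
}

# Reverse the change: enable OpenMPI and comment out MPICH.
use_openmpi() {
  sed -i -e 's|^#module add openmpi/|module add openmpi/|' \
         -e 's|^module add mpich/|#module add mpich/|' "$1"
}
```

For example, run use_mpich mpi_prime.sh, then rerun mpi_prime_compile.sh so the program is built against the same MPI it will run with. The module avail command lists the module versions installed on your cluster.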

Finally, ensure that the number of processes passed to mpirun with -np equals the number of nodes multiplied by the number of tasks per node, in this case 2 * 2 = 4:

```
cd "$SHARED_FS_NAME/samplejobs/mpi"
# -np = nodes (2) x tasks per node (2) = 4
mpirun -np 4 "$SHARED_FS_NAME/samplejobs/mpi/mpi_prime"
```
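Rather than keeping -np in sync with the #SBATCH lines by hand, a Slurm job script can compute it from the allocation. The following is a minimal sketch for Slurm only, because Torque sets different variables. Inside a job, Slurm sets SLURM_JOB_NUM_NODES, and it sets SLURM_NTASKS_PER_NODE when --ntasks-per-node is given. The helper name np_for_job is hypothetical.

```shell
#!/bin/sh
# Prints the MPI process count for the current Slurm allocation:
# nodes * tasks per node. Falls back to 2 and 2 (this sample's values)
# when the Slurm variables are not set, e.g. when run outside a job.
np_for_job() {
  nodes=${SLURM_JOB_NUM_NODES:-2}
  per_node=${SLURM_NTASKS_PER_NODE:-2}
  echo $((nodes * per_node))
}

# Inside the job script, the mpirun line could then become:
#   mpirun -np "$(np_for_job)" "$SHARED_FS_NAME/samplejobs/mpi/mpi_prime"
```

With this approach, changing -N or --ntasks-per-node in the #SBATCH lines is enough, and the process count follows automatically.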

Save your changes, exit the editor, and run the mpi_prime_compile.sh script. Compilation completes almost instantaneously, and at the next command prompt you can submit the job script using CCQ:

```
$ ccqsub mpi_prime.sh
```

What to Expect Next

If CCQ validates the job, you will immediately receive a confirmation message containing the Job ID associated with your submission:

```
$ ccqsub mpi_prime.sh
The job has successfully been submitted to the scheduler scheduler and is currently being processed.
The job id is: 319720 you can use this id to look up the job status using the ccqstat utility.
```
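If you script your submissions, you can capture the Job ID from this confirmation message. The following is a minimal sketch that assumes ccqsub writes the message to standard output and keeps the "The job id is: <number>" wording shown above. The helper name parse_job_id is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads ccqsub's confirmation message on stdin and
# prints only the numeric job ID (nothing if the wording does not match).
parse_job_id() {
  sed -n 's/.*The job id is: \([0-9][0-9]*\).*/\1/p'
}

# Example on a CloudyCluster login node:
#   JOB_ID=$(ccqsub mpi_prime.sh | parse_job_id)
#   echo "Submitted job $JOB_ID"
```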

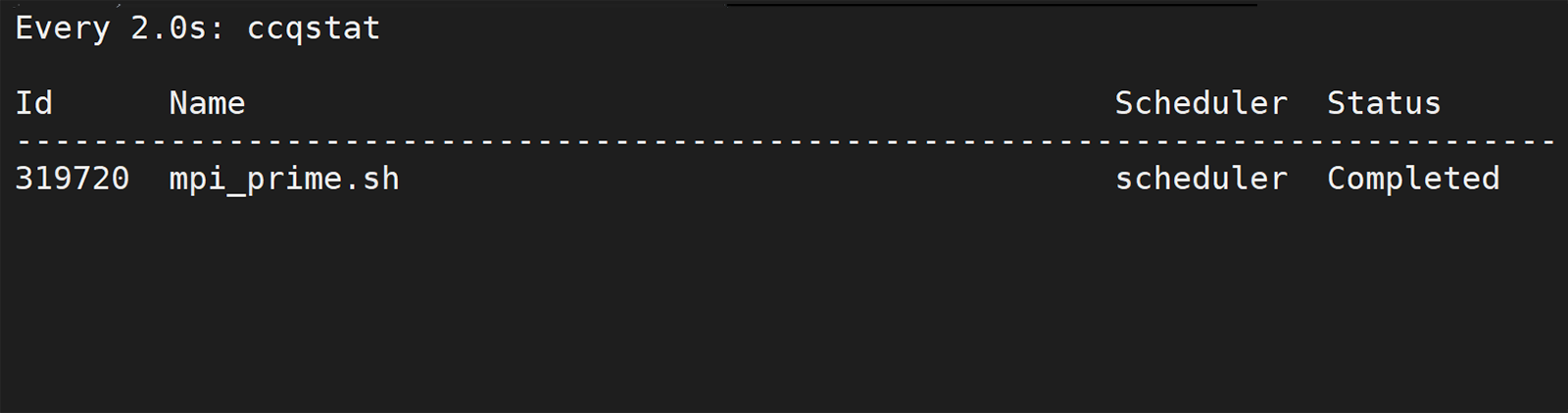

As the message notes, you can track the status of your job with the ccqstat utility. Run $ ccqstat for a snapshot of your jobs at that moment. For a near real-time view of the jobs as they move from state to state until completion, run $ watch ccqstat, which reruns ccqstat every two seconds by default.

Reviewing the Output

Once the job completes, ccqstat will report its status as Completed.

Unless otherwise specified, CCQ writes job output to your home directory, in a file named after the job script and the job ID:

```
$ vim mpi_prime.sh<<job_id>>.o

Using 4 tasks to scan 25000000 numbers
Done. Largest prime is 24999983 Total primes 1565927
Wallclock time elapsed: 4.05 seconds
```

Errors, if there were any, would be written to the same directory, in the matching mpi_prime.sh<<job_id>>.e file.
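To check a run without opening an editor, you can compare the output against the total shown above. The following is a minimal sketch that assumes the mpi_prime.sh<<job_id>>.o / .e naming and the "Total primes" output line shown above. It also assumes the default 25,000,000 range, so the expected total would differ if you change the range. The helper name check_mpi_prime_run is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: summarizes a finished MPIPrime run.
#   $1 = job ID, $2 = directory holding the output (defaults to $HOME)
check_mpi_prime_run() {
  job_id=$1
  dir=${2:-$HOME}
  out="$dir/mpi_prime.sh$job_id.o"
  err="$dir/mpi_prime.sh$job_id.e"
  if [ ! -f "$out" ]; then
    echo "missing $out" >&2
    return 1
  fi
  # Pull the number that follows "Total primes" in the output file.
  total=$(sed -n 's/.*Total primes \([0-9][0-9]*\).*/\1/p' "$out")
  if [ "$total" = "1565927" ]; then
    echo "OK: $total primes found"
  else
    echo "Unexpected result: '$total'" >&2
    return 1
  fi
  # A non-empty error file is worth a look even when the total is right.
  if [ -s "$err" ]; then
    echo "Note: $err is not empty" >&2
  fi
}
```

For example, check_mpi_prime_run 319720 checks the output of the job submitted above.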

Congratulations on reviewing or completing this quick tutorial leveraging the CloudyCluster Sample Jobs to prepare for your next big run!